2025-12-10 13:40:56

1. Benchmark Execution Summary

1.1. Session

- Hostname: gaya
- User: gaya
- Time Start: 20251210T134100+0100
- Time End: 20251211T001706+0100

1.3. Parametrization

| Status | Hash | resources.tasks | memory | mesh | discretization | solver | Total Time (s) |
|---|---|---|---|---|---|---|---|
| 🟢 | 167af2e7 | 32 | 50 | M1 | P1 | gamg | 5603.943 |
| 🟢 | 176d3fed | 4 | 200 | M1 | P3 | gamg | 3206.041 |
| 🟢 | 17975aa7 | 64 | 400 | M3 | P1 | gamg | 6032.827 |
| 🟢 | 23cd5463 | 128 | 200 | M1 | P2 | gamg | 6090.893 |
| 🟢 | 244b6f4f | 16 | 50 | M2 | P1 | gamg | 5572.734 |
| 🟢 | 27195a9a | 2 | 50 | M2 | P1 | gamg | 1941.177 |
| 🔴 | 2e057b04 | 1 | 1200 | M2 | P3 | gamg | 1753.755 |
| 🟢 | 2fee09d5 | 64 | 50 | M2 | P1 | gamg | 5911.237 |
| 🟢 | 32beb7e3 | 1 | 200 | M2 | P2 | gamg | 1501.813 |
| 🟢 | 345604ad | 8 | 200 | M1 | P3 | gamg | 4693.128 |
| 🟢 | 39768c0a | 64 | 1200 | M2 | P3 | gamg | 37472.491 |
| 🟢 | 3f3de4ff | 8 | 50 | M1 | P1 | gamg | 3295.840 |
| 🟢 | 3fb44e10 | 4 | 200 | M1 | P2 | gamg | 3122.187 |
| 🟢 | 45824f95 | 32 | 200 | M2 | P2 | gamg | 5780.014 |
| 🟢 | 4d73eb1b | 128 | 200 | M1 | P3 | gamg | 6127.138 |
| 🟢 | 4f0f94c0 | 16 | 200 | M1 | P3 | gamg | 5213.542 |
| 🟢 | 5349842d | 384 | 400 | M3 | P1 | gamg | 38052.912 |
| 🟢 | 5604fc20 | 256 | 400 | M3 | P1 | gamg | 37157.406 |
| 🟢 | 5a008286 | 32 | 1200 | M2 | P3 | gamg | 36970.633 |
| 🟢 | 5c01ce2b | 8 | 200 | M1 | P2 | gamg | 4629.449 |
| 🟢 | 62f13b88 | 128 | 50 | M1 | P1 | gamg | 6063.780 |
| 🟢 | 6311f485 | 32 | 400 | M3 | P1 | gamg | 5899.037 |
| 🟢 | 688bd837 | 4 | 50 | M2 | P1 | gamg | 3254.202 |
| 🟢 | 6892f9ed | 16 | 50 | M1 | P1 | gamg | 4765.445 |
| 🟢 | 68d6fab9 | 64 | 1200 | M3 | P2 | gamg | 37716.317 |
| 🟢 | 693cb2e9 | 128 | 400 | M3 | P1 | gamg | 36574.423 |
| 🟢 | 69498aae | 4 | 200 | M2 | P2 | gamg | 3539.596 |
| 🟢 | 6de50075 | 8 | 200 | M2 | P2 | gamg | 4898.058 |
| 🟢 | 6f8fe105 | 2 | 50 | M1 | P1 | gamg | 1528.769 |
| 🟢 | 7785a035 | 128 | 1200 | M2 | P3 | gamg | 37757.479 |
| 🟢 | 7cd50510 | 1 | 200 | M1 | P3 | gamg | 405.949 |
| 🟢 | 850da4f0 | 1 | 50 | M1 | P1 | gamg | 55.464 |
| 🟢 | 8531d310 | 1 | 50 | M2 | P1 | gamg | 531.810 |
| 🟢 | 956d2d83 | 64 | 200 | M1 | P2 | gamg | 5847.395 |
| 🔴 | 9656e45f | 2 | 200 | M2 | P2 | gamg | 2154.733 |
| 🔴 | 98df175a | 4 | 1200 | M2 | P3 | gamg | 36465.514 |
| 🟢 | 9c0c73a6 | 128 | 200 | M2 | P2 | gamg | 6264.085 |
| 🟢 | a2abc5fb | 32 | 50 | M2 | P1 | gamg | 5684.020 |
| 🟢 | a78765f5 | 16 | 200 | M2 | P2 | gamg | 5631.239 |
| 🟢 | a9eabded | 4 | 50 | M1 | P1 | gamg | 3102.085 |
| 🟢 | af27eff5 | 256 | 1200 | M3 | P2 | gamg | 37997.657 |
| 🔴 | b02bfe9c | 8 | 1200 | M2 | P3 | gamg | 36701.205 |
| 🟢 | b4ac4784 | 1 | 200 | M1 | P2 | gamg | 128.562 |
| 🟢 | ba32da44 | 64 | 50 | M1 | P1 | gamg | 5816.028 |
| 🔴 | c19ec7ad | 2 | 1200 | M2 | P3 | gamg | 3084.710 |
| 🟢 | c8addc44 | 16 | 200 | M1 | P2 | gamg | 4801.674 |
| 🟢 | c91ae1d8 | 32 | 1200 | M3 | P2 | gamg | 37400.100 |
| 🟢 | ccdebbea | 128 | 50 | M2 | P1 | gamg | 6190.901 |
| 🟢 | d0631d6d | 64 | 200 | M1 | P3 | gamg | 5883.660 |
| 🟢 | d917cf05 | 128 | 1200 | M3 | P2 | gamg | 37901.608 |
| 🟢 | de8f4f1b | 32 | 200 | M1 | P2 | gamg | 5635.676 |
| 🔴 | e853ec5e | 2 | 200 | M1 | P3 | gamg | 1711.795 |
| 🟢 | f1d5f83f | 384 | 1200 | M3 | P2 | gamg | 38163.210 |
| 🟢 | f29397bf | 2 | 200 | M1 | P2 | gamg | 1601.889 |
| 🟢 | fa4f1061 | 64 | 200 | M2 | P2 | gamg | 5959.450 |
| 🟢 | fc15b253 | 8 | 50 | M2 | P1 | gamg | 4729.373 |
| 🔴 | fd0b630b | 16 | 1200 | M2 | P3 | gamg | 36826.805 |
| 🟢 | ff585e9d | 32 | 200 | M1 | P3 | gamg | 5683.963 |
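A table like this one can be post-processed to find, for each mesh and discretization, the smallest task count that completed. The sketch below is only an illustration: it assumes that 🟢 marks a passing run and 🔴 a failing one (the report does not define the markers), and the function name `min_successful_tasks` is hypothetical. The rows are the M2/P3 entries copied from the parametrization table.

```python
from collections import defaultdict

# (passed, tasks, mesh, discretization), copied from the M2/P3 rows of the table.
# Assumption: the green marker means "passed", the red marker means "failed".
runs = [
    (False, 1, "M2", "P3"),
    (False, 2, "M2", "P3"),
    (False, 4, "M2", "P3"),
    (False, 8, "M2", "P3"),
    (False, 16, "M2", "P3"),
    (True, 32, "M2", "P3"),
    (True, 64, "M2", "P3"),
    (True, 128, "M2", "P3"),
]


def min_successful_tasks(runs):
    """Return {(mesh, discretization): smallest task count with a passing run}."""
    passing = defaultdict(list)
    for passed, tasks, mesh, disc in runs:
        if passed:
            passing[(mesh, disc)].append(tasks)
    return {case: min(tasks) for case, tasks in passing.items()}


print(min_successful_tasks(runs))  # {('M2', 'P3'): 32}
```

Under that reading of the markers, every M2/P3 run with 16 tasks or fewer is flagged red, and the first green run uses 32 tasks.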

2. Benchmark: Elliptic linear PDE: Thermal Bridges

2.1. Description

The benchmark known as "thermal bridges" is an example of an application that enables us to validate numerical simulation tools using Feel++. We have developed tests based on the ISO 10211:2017 standard (ISO 10211:2017 - Thermal bridges in building construction — Heat flows and surface temperatures — Detailed calculations, 2017), which provides methodologies for evaluating thermal bridges in building construction.

Thermal bridges are areas within a building envelope where the heat flow differs from that of adjacent areas, often resulting in increased heat loss or unwanted condensation. The standard is intended to ensure that thermal bridge simulations are computed accurately. It provides reference values (with tolerances) for the temperature and the heat flux at several locations of the geometry.

At the mathematical level, this application requires the numerical solution of a linear elliptic PDE (i.e. the stationary heat equation). We employ a finite element method based on continuous Lagrange finite elements of order 1, 2 and 3 (denoted by P1, P2 and P3), and we analyze the execution time of the main components of the simulation.
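For reference, this problem class can be written as follows. It is a sketch under assumptions: the symbols $k$ (thermal conductivity, piecewise constant per material), $h$ (surface exchange coefficient), $T_{\mathrm{ext}}$ (ambient temperature) and $\Gamma_R$ (exchange boundary) are not defined in this report, and the Robin and adiabatic boundary conditions are only a common way to model ISO 10211-type cases, not a statement of the exact setup used here.

```latex
% Strong form (assumed boundary conditions)
\begin{aligned}
-\nabla\cdot\left(k\,\nabla T\right) &= 0 && \text{in } \Omega,\\
-k\,\nabla T\cdot n &= h\,(T - T_{\mathrm{ext}}) && \text{on } \Gamma_R,\\
-k\,\nabla T\cdot n &= 0 && \text{on } \partial\Omega\setminus\Gamma_R.
\end{aligned}

% Discrete weak form, with V_h the continuous Lagrange space of order 1, 2 or 3
\text{Find } T_h \in V_h \text{ such that }\quad
\int_\Omega k\,\nabla T_h\cdot\nabla v \,\mathrm{d}x
+ \int_{\Gamma_R} h\,T_h\,v \,\mathrm{d}s
= \int_{\Gamma_R} h\,T_{\mathrm{ext}}\,v \,\mathrm{d}s
\qquad \forall v \in V_h .
```

The weak form follows from multiplying the strong form by a test function $v$, integrating by parts, and substituting the boundary conditions into the boundary term.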

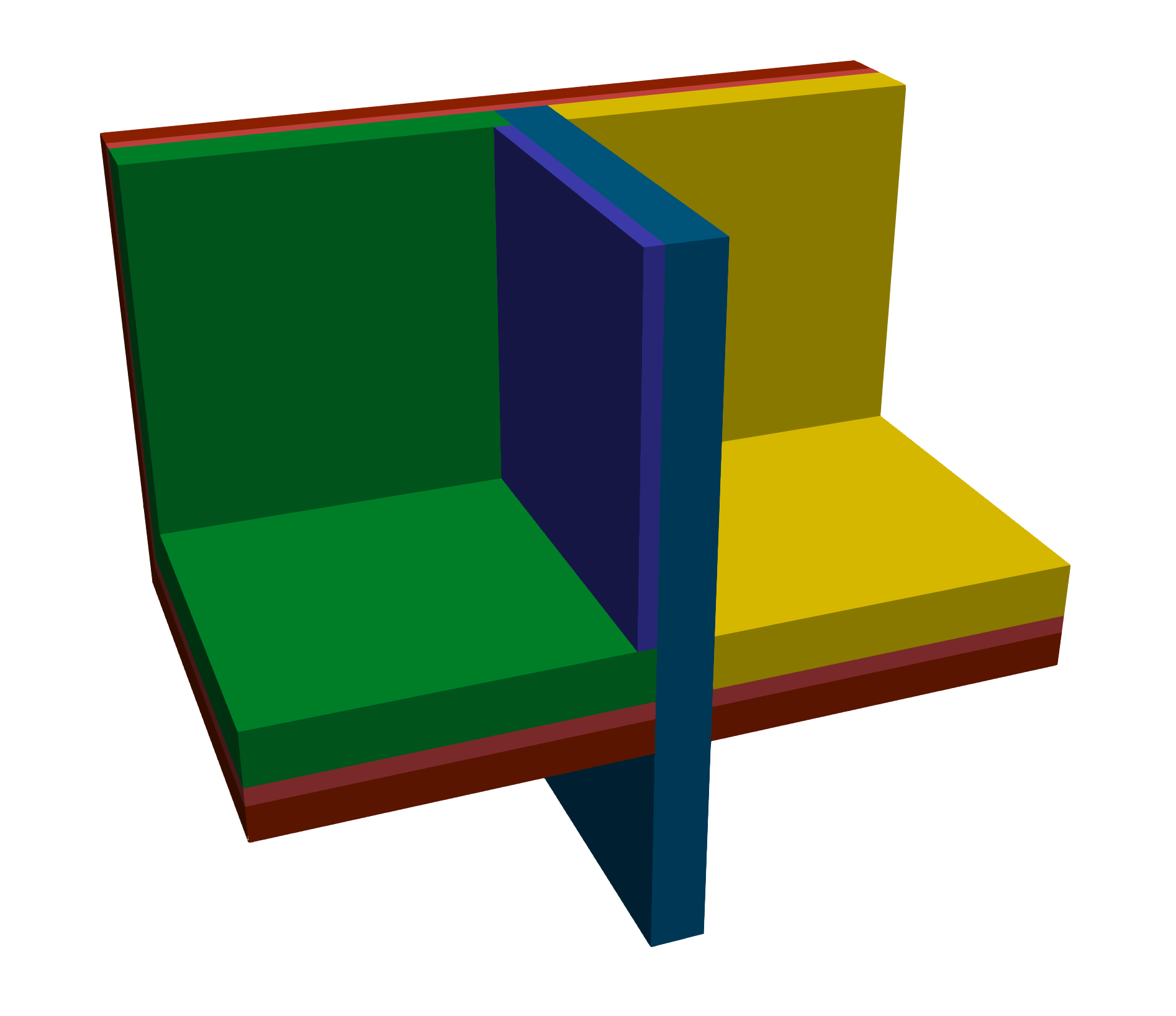

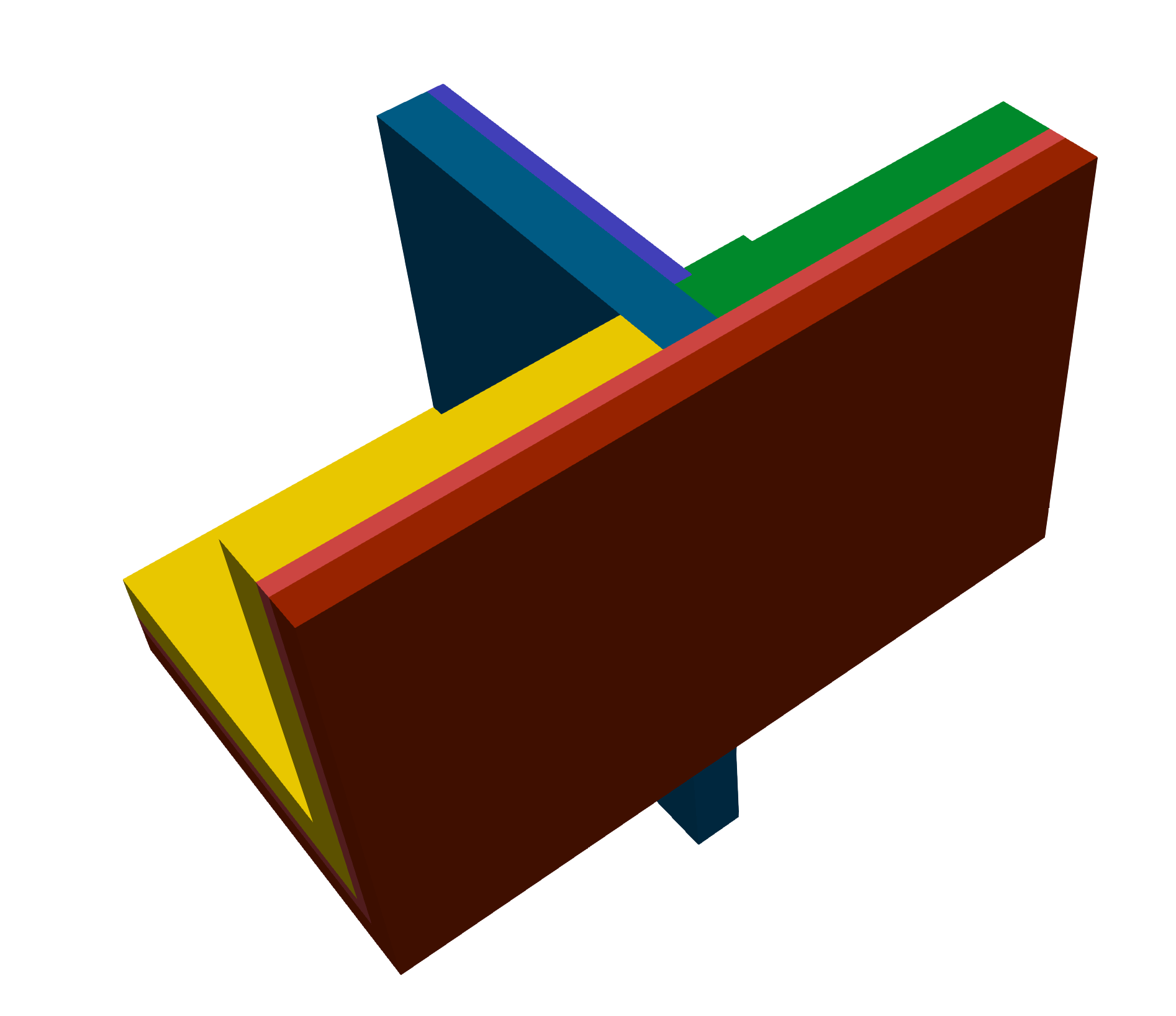

Figure 4.3 shows the geometry of this benchmark and the decomposition of the domain by material.

2.2. Benchmarking Tools Used

The benchmark was performed on the gaya supercomputer (see Section 10.1). The performance tools integrated into the Feel++ toolboxes framework were used to measure the execution time. Note that the runs used Apptainer with a Feel++ SIF image based on Ubuntu noble.

This benchmark was done using feelpp.benchmarking, version 4.0.0.

The metrics measured are the execution times of the main components of the simulation:

- Init: load the mesh from the filesystem and initialize the heat toolbox (finite element context and algebraic data structures)
- Assembly: compute and assemble the matrix and right-hand side values obtained with the finite element method
- Solve: solve the linear system with a preconditioned GMRES
- PostProcess: compute the validation measures (temperature at points and heat flux) and export the solution to the filesystem in a visualization format (EnSight Gold)
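The four phases listed above can be timed separately with a small wrapper. The sketch below is not the Feel++ instrumentation: `phase` and `run_toy_heat` are hypothetical names, the problem is a 1D toy (-u'' = 1 on (0, 1) with homogeneous Dirichlet conditions, P1 elements), and a direct tridiagonal solve (Thomas algorithm) stands in for the preconditioned GMRES used in the benchmark.

```python
import time
from contextlib import contextmanager


@contextmanager
def phase(name, timings):
    """Accumulate the wall-clock time of the enclosed block under `name`."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[name] = timings.get(name, 0.0) + time.perf_counter() - start


def run_toy_heat(n=100):
    """Solve -u'' = 1 on (0, 1), u(0) = u(1) = 0, with n P1 elements (n even)."""
    timings = {}
    with phase("init", timings):
        h = 1.0 / n
        m = n - 1  # number of interior nodes (unknowns)
    with phase("assembly", timings):
        diag = [2.0 / h] * m  # stiffness matrix diagonal
        off = -1.0 / h        # constant sub- and super-diagonal entry
        rhs = [h] * m         # load vector for f = 1
    with phase("solve", timings):
        # Thomas algorithm: forward elimination, then back substitution.
        cp = [0.0] * m
        dp = [0.0] * m
        cp[0] = off / diag[0]
        dp[0] = rhs[0] / diag[0]
        for i in range(1, m):
            denom = diag[i] - off * cp[i - 1]
            cp[i] = off / denom
            dp[i] = (rhs[i] - off * dp[i - 1]) / denom
        u = [0.0] * m
        u[-1] = dp[-1]
        for i in range(m - 2, -1, -1):
            u[i] = dp[i] - cp[i] * u[i + 1]
    with phase("postprocess", timings):
        u_mid = u[n // 2 - 1]  # nodal value at x = 0.5
    return u_mid, timings


u_mid, timings = run_toy_heat(100)
print(f"u(0.5) = {u_mid:.6f}")  # exact solution x(1 - x)/2 gives 0.125
for name, seconds in timings.items():
    print(f"{name:12s} {seconds:.6f} s")
```

Keeping one accumulator per phase makes it easy to see which component dominates as the task count changes.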

2.3. Input/Output Dataset Description

2.3.1. Input Data

- Meshes: We generated three mesh levels, called M1, M2 and M3, stored in Gmsh format. Their statistics are given in Table 4.6. For each mesh level we also prepared a collection of partitioned meshes, stored in the in-house Feel++ mesh format based on JSON and HDF5 files. The Gmsh meshes and the partitioned meshes are available on our Girder data management platform, in the Feel++ collections.
- Setup: The standard setup of the Feel++ toolboxes is used. It consists of a cfg file and a JSON file, both available in the feelpp GitHub repository.
- SIF image: feelpp:v0.111.0-preview.10-noble-sif (stored in the GitHub registry of Feel++)

| Tag | # points | # edges | # faces | # elements | # DOFs (P1) | # DOFs (P2) | # DOFs (P3) |
|---|---|---|---|---|---|---|---|
| M1 | 1.94E+05 | 1.30E+06 | 2.46E+06 | 1.06E+06 | 1.94E+05 | 1.49E+06 | 4.96E+06 |
| M2 | 1.40E+06 | 9.78E+06 | 1.66E+07 | 1.66E+07 | 1.40E+06 | 1.12E+07 | 3.75E+07 |
| M3 | 1.06E+07 | 7.53E+07 | 1.29E+08 | 1.29E+08 | 1.06E+07 | 8.59E+07 | 2.90E+08 |
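The P1, P2 and P3 columns are consistent with degree-of-freedom counts for continuous Lagrange elements. The sketch below checks this against the entity counts, assuming a tetrahedral mesh (not stated in this report), where P1 has one DOF per point, P2 adds one per edge, and P3 has one per point, two per edge and one per face. The function name `lagrange_dofs` is hypothetical, and all inputs are the rounded values from the table.

```python
# Entity counts and tabulated P1/P2/P3 values copied from the mesh table (rounded).
meshes = {
    "M1": {"points": 1.94e5, "edges": 1.30e6, "faces": 2.46e6,
           "P1": 1.94e5, "P2": 1.49e6, "P3": 4.96e6},
    "M2": {"points": 1.40e6, "edges": 9.78e6, "faces": 1.66e7,
           "P1": 1.40e6, "P2": 1.12e7, "P3": 3.75e7},
    "M3": {"points": 1.06e7, "edges": 7.53e7, "faces": 1.29e8,
           "P1": 1.06e7, "P2": 8.59e7, "P3": 2.90e8},
}


def lagrange_dofs(points, edges, faces, order):
    """DOF count of continuous Lagrange elements of order 1 to 3 on tetrahedra."""
    if order == 1:
        return points
    if order == 2:
        return points + edges
    if order == 3:
        return points + 2 * edges + faces
    raise ValueError("only orders 1, 2 and 3 are handled")


def relative_gap(tag, order):
    """Relative difference between the formula and the tabulated value."""
    row = meshes[tag]
    expected = lagrange_dofs(row["points"], row["edges"], row["faces"], order)
    tabulated = row[f"P{order}"]
    return abs(expected - tabulated) / tabulated


for tag in meshes:
    for order in (1, 2, 3):
        print(f"{tag} P{order}: relative gap {relative_gap(tag, order):.2%}")
```

With these rounded inputs the formula matches the table to within 1% for every entry except M1/P3, where it gives about 5.25E+06 against the tabulated 4.96E+06. The report does not explain this gap.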

2.4. Results Summary

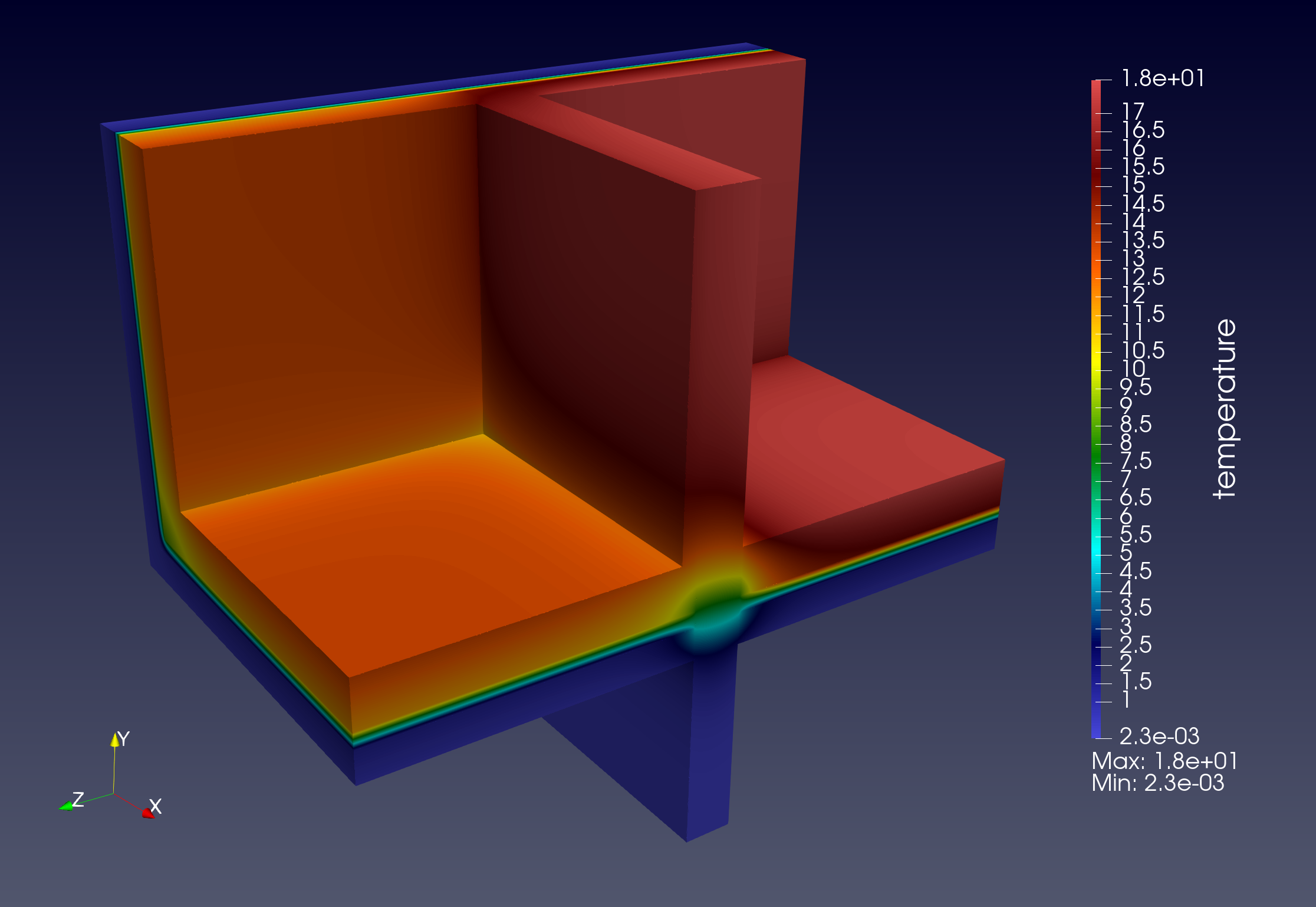

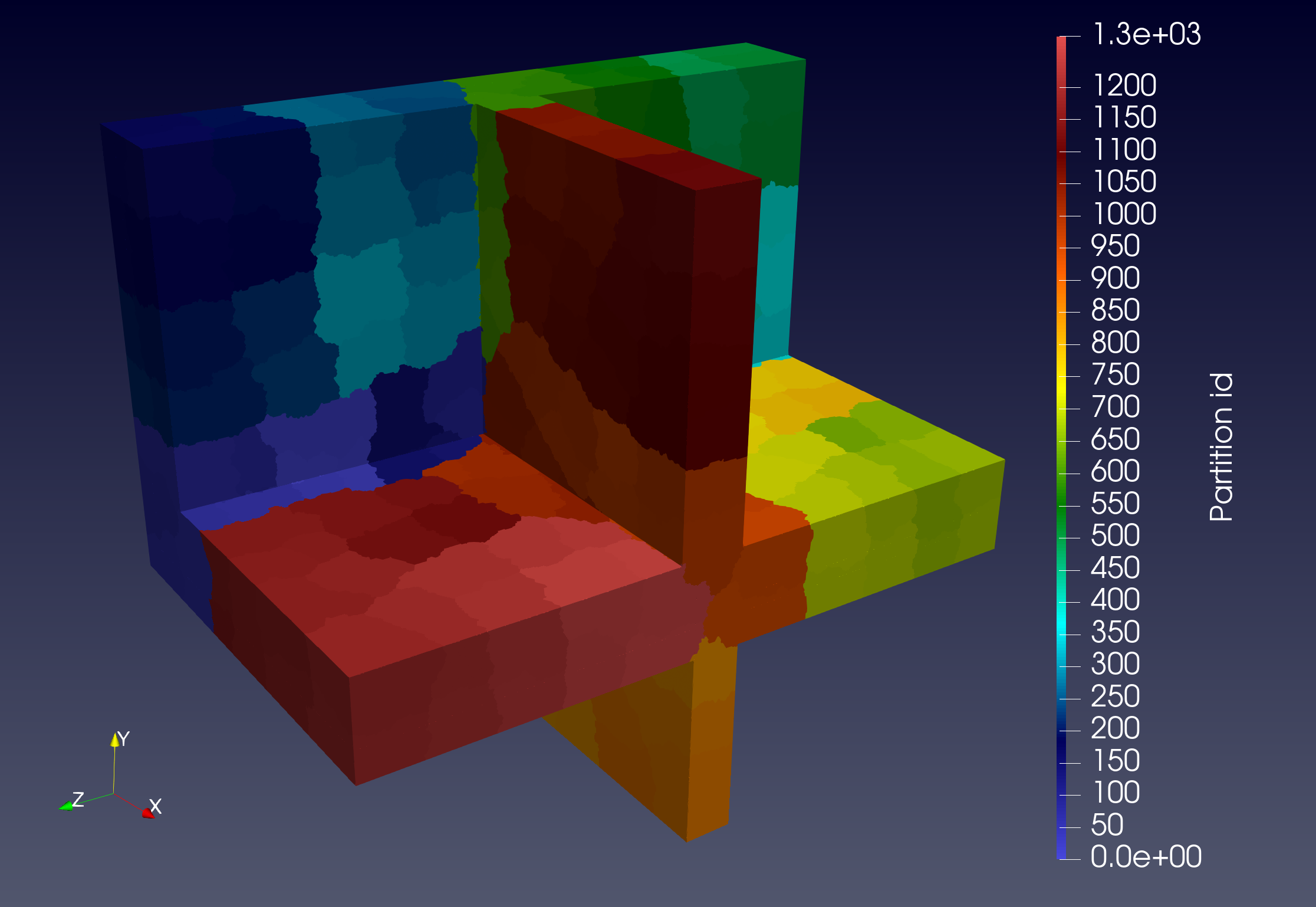

We start by showing in Fig. 4.4 an example of the numerical solution and of the mesh partitioning obtained in the simulation pipeline. Partitioning is treated as an offline step here, but it has a time and memory cost of its own, which should be reported explicitly in future work. In Fig. 4.5, we validate the simulation runs by comparing the computed measures with the reference values.

The benchmark performance results are summarized in Figure 4.6, Figure 4.7 and Figure 4.8, which correspond to the meshes M1, M2 and M3 respectively. For each mesh, we experimented with the finite element discretizations P1, P2 and P3, and for each approximation order we selected a set of CPU core counts. For the coarse mesh M1, the scalability is poor, especially at low order. The problem is simply too small for this amount of HPC resources, so MPI communication and IO effects are non-negligible. For the meshes M2 and M3, the results are better (but not ideal), and the limit of the scalability test is reached quickly. The finest mesh, M3, shows the best scalability in this benchmarking experiment: the computational cost decreases as computing resources are added. However, because each run is already short, the execution time quickly reaches a plateau.

These benchmarking experiments also revealed some variability in the performance measures. Some factors, such as filesystem and network load, are outside our control and can explain part of this variability, especially when the measured computation time is short.
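Scalability statements of this kind are usually quantified with the strong-scaling speedup and parallel efficiency relative to the smallest task count. The sketch below shows the arithmetic only: the function name `strong_scaling` is hypothetical and the timings are made-up placeholder values, not measurements from this benchmark.

```python
def strong_scaling(times):
    """Map {n_tasks: wall time in s} to {n_tasks: (speedup, efficiency)}.

    Both quantities are relative to the run with the fewest tasks (p0, t0):
    speedup = t0 / t and efficiency = (p0 * t0) / (p * t).
    """
    p0 = min(times)
    t0 = times[p0]
    return {p: (t0 / t, (p0 * t0) / (p * t)) for p, t in sorted(times.items())}


# Hypothetical timings in seconds, for illustration only.
example = {32: 100.0, 64: 55.0, 128: 35.0}
for p, (speedup, efficiency) in strong_scaling(example).items():
    print(f"{p:4d} tasks: speedup {speedup:.2f}, efficiency {efficiency:.2f}")
```

An efficiency close to 1 means that doubling the resources nearly halves the time; a value that drops quickly as tasks are added corresponds to the plateau described above.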

2.5. Challenges Identified

Several challenges were encountered during the benchmarking process:

- Memory Usage: Reduce the memory footprint
- Parallelization Inefficiencies: Understand and improve performance when MPI communication and filesystem IO become dominant

To conclude, we carried out HPC performance tests on the thermal bridges benchmark. We successfully ran several simulations on significant resources and validated the Feel++ framework in the elliptic PDE context. We also validated the deployment of Feel++ with container support. The next steps are to provide finer-grained measurements in order to detect and analyze the causes of performance degradation, and to compare with other software installation methods, such as Spack.

2.6. Results

2.6.1. Convergence of validation measures

2.6.5. Solver Metrics

To understand the parallel scalability, two key solver metrics are presented: the absolute execution time for the algebraic solve step and the number of iterations required by the GMRES solver. Stable or decreasing iteration counts with mesh refinement and strong scaling in solve time are essential indicators of an efficient preconditioner and solver configuration.