4. Hands-on (Session 2)

1. Benchmarking a Feel++ toolbox on Karolina

Before you start this hands-on exercise, follow the steps below to set up your environment on Karolina.

1.1. Pre-requisites

| You must have set up feelpp.benchmarking beforehand. If not, please follow the prerequisites section of this exercise. |

- Load the required modules:

module load Python/3.12.3-GCCcore-13.3.0

module load nodejs/20.13.1-GCCcore-13.3.0

- Source the existing Python virtual environment:

Make sure you are located in the directory where you have set up feelpp.benchmarking.

source .venv/bin/activate

1.2. The Feel++ Heat Transfer toolbox

In this exercise, we will benchmark the thermal bridges use case of the Feel++ Heat Transfer toolbox on Karolina.

1.2.1. What is Feel++?

Feel++ is an open-source C++ library for solving a large range of partial differential equations using Galerkin methods, e.g. the finite element method, the spectral element method, discontinuous Galerkin methods or reduced basis methods.

You can find more information on the Heat Transfer toolbox in this link

1.2.2. What is the Thermal Bridges benchmark?

The benchmark known as "thermal bridges" is an example of an application that enables us to validate numerical simulation tools using Feel++. We have developed tests based on the ISO 10211:2017 standard, which provides methodologies for evaluating thermal bridges in building construction.

Thermal bridges are areas within a building envelope where the heat flow differs from that of adjacent areas, often resulting in increased heat loss or unwanted condensation. The standard is intended to ensure that thermal bridge simulations are computed accurately.

At the mathematical level, this application requires finding the numerical solution of an elliptic linear PDE (i.e. the steady-state heat equation). We employ a finite element method based on continuous Lagrange finite elements of order 1, 2 and 3 (denoted by P1, P2 and P3), and we analyze the execution time of the main components of the simulation.

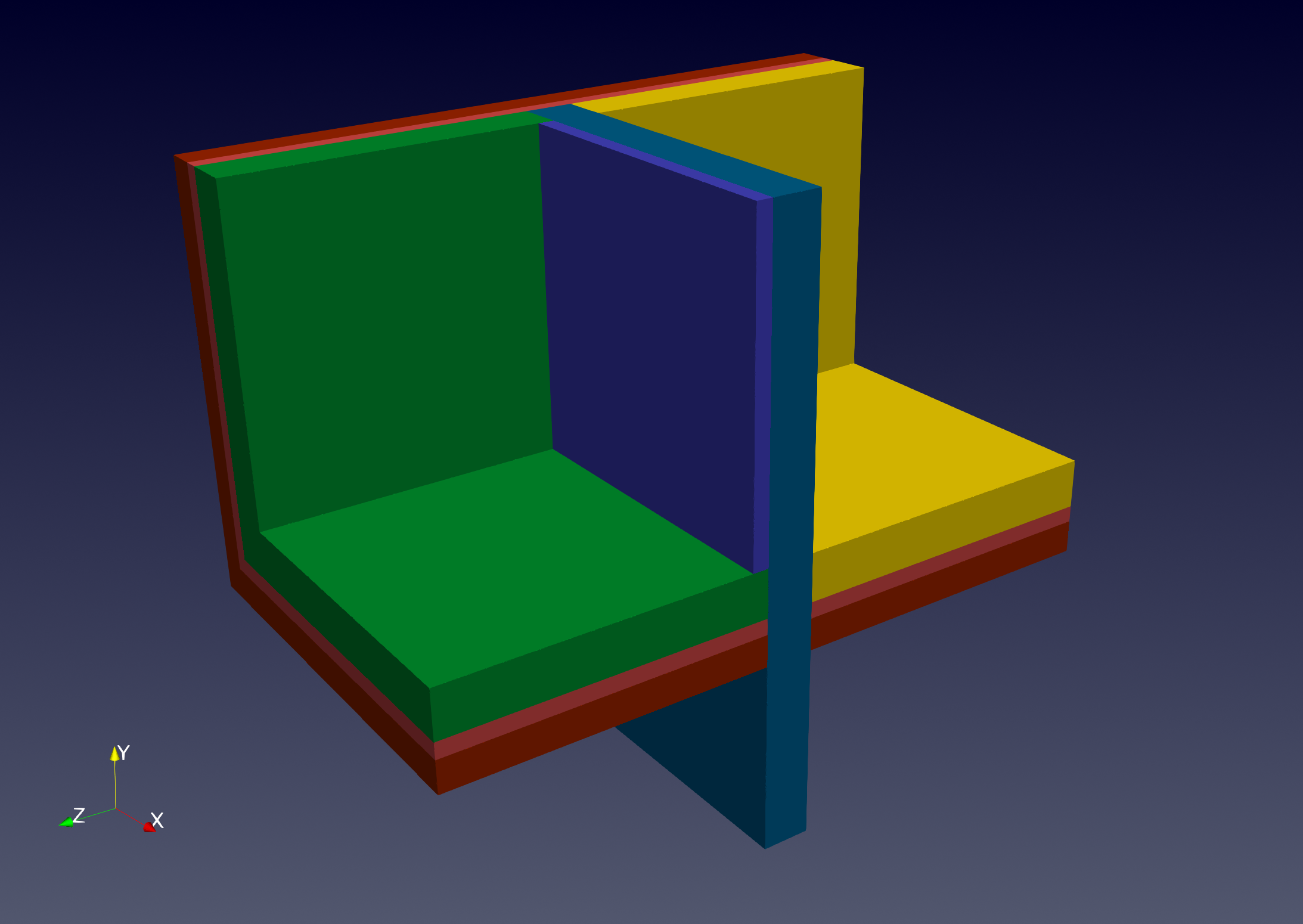

Below is an image showing the geometry and materials of the input mesh used in this benchmark.

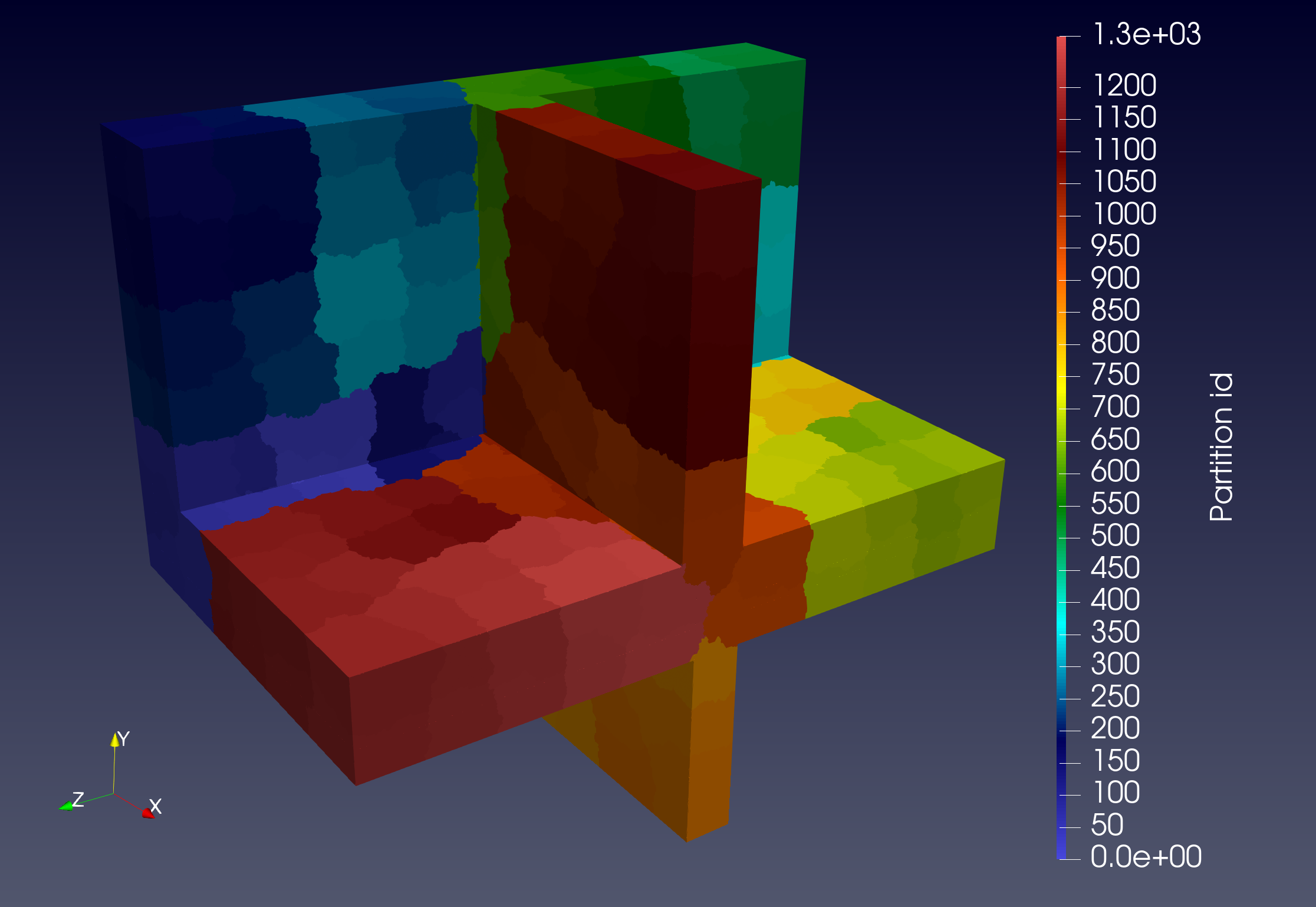

The following image shows an example of the mesh partitioning, which is done beforehand.

1.3. Modifying Karolina’s specification file

This benchmark is a little different from what we did in the previous exercises, so the machine specification file needs to be adapted.

Solution

{

"targets":["qcpu:apptainer:default"],

"containers":{

"apptainer":{

"image_base_dir":"/scratch/project/dd-24-88/benchmarking/inputdata/images",

"options":[]

}

}

}

| You can use the $USER environment variable. |

Solution

{

"reports_base_dir":"$PWD/reports",

"input_dataset_base_dir":"/scratch/project/dd-24-88/benchmarking/inputdata",

"reframe_base_dir":"/scratch/project/dd-24-88/benchmarking/participants/$USER/reframe",

"output_app_dir":"/scratch/project/dd-24-88/benchmarking/participants/$USER"

}

In theory, the output_app_dir directory should exist before launching the application, but we can use a little trick to let feelpp.benchmarking create it: we set "reframe_base_dir":"/scratch/project/dd-24-88/benchmarking/participants/$USER/reframe" so that ReFrame creates the directory before running the tests.

You can see that under access, we have specified --mem=200G. This is just a workaround for a bug in the SLURM configuration on Karolina.
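To preview what the paths of the machine specification resolve to for your own account, the variable references can be expanded by hand. The following is a minimal Python sketch, not feelpp.benchmarking's own mechanism (how the tool expands $USER and $PWD internally is not described here); the helper name `expand_paths` is hypothetical.

```python
import os
from string import Template

# Paths copied from the machine specification in this section.
MACHINE_PATHS = {
    "reports_base_dir": "$PWD/reports",
    "reframe_base_dir": "/scratch/project/dd-24-88/benchmarking/participants/$USER/reframe",
    "output_app_dir": "/scratch/project/dd-24-88/benchmarking/participants/$USER",
}


def expand_paths(paths, env=None):
    """Return a copy of `paths` with $VAR references replaced from `env`.

    Hypothetical helper: variables missing from `env` are left untouched.
    """
    env = os.environ if env is None else env
    return {key: Template(value).safe_substitute(env) for key, value in paths.items()}


if __name__ == "__main__":
    # Uses the variables of the current shell session.
    for key, value in expand_paths(MACHINE_PATHS).items():
        print(f"{key}: {value}")
```

Running it in the shell you will launch the benchmarks from shows whether output_app_dir is indeed a subdirectory of the parent of reframe_base_dir, which is what the directory-creation trick relies on.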

1.4. Application setup

We will benchmark this use case using a SIF image. The image is already available on Karolina, under /scratch/project/dd-24-88/benchmarking/inputdata/images/feelpp-noble.sif.

The Feel++ toolbox can be executed inside the container using feelpp_toolbox_heat.

Start by creating a benchmark specification file; we will add fields to it in each exercise.

Solution

{

"executable": "feelpp_toolbox_heat",

"use_case_name": "ThermalBridges",

"timeout":"0-00:10:00"

}

Solution

{

"platforms": {

"apptainer":{

"image": {

"filepath":"{{machine.containers.apptainer.image_base_dir}}/feelpp-noble.sif"

},

"input_dir":"/input_data",

"options": [

"--home {{machine.output_app_dir}}",

"--bind {{machine.input_dataset_base_dir}}/:{{platforms.apptainer.input_dir}}",

"--env OMP_NUM_THREADS=1"

],

"append_app_option":[]

}

}

}

Solution

Add a builtin field to the platforms field. Other fields can now access the input data directory of the platform defined by the machine, using {{platforms.{{machine.platform}}.input_dir}}.

"builtin":{

"input_dir":"{{machine.input_dataset_base_dir}}",

"append_app_option":[]

}

1.5. Input data

The shared folder /scratch/project/dd-24-88/benchmarking/inputdata/ThermalBridges/ contains all necessary input dependencies for the application.

It is structured as follows:

- ThermalBridges/
  - M1/: mesh level 1
  - M2/: mesh level 2
  - gamg.cfg: configuration file for the Generalized Geometric-Algebraic MultiGrid solver

Each mesh folder contains a collection of partitioned meshes that use a Feel++ in-house format (JSON+HDF5).

The partitioned files are named case3_p*.h5 and case3_p*.json, where * is the number of partitions.
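The naming scheme can be turned into a small check that the partitions you plan to use are actually present. This is a minimal Python sketch: the helper names are hypothetical, and the partition counts 32, 64 and 128 are assumed from the task counts used later in this exercise, with one partition per task.

```python
from pathlib import Path


def partition_files(mesh, partitions, root="ThermalBridges"):
    """Relative paths of the JSON and HDF5 files for one mesh level and partition count."""
    stem = f"{root}/{mesh}/case3_p{partitions}"
    return [f"{stem}.json", f"{stem}.h5"]


def missing_files(base_dir, meshes, partition_counts):
    """List the expected partition files that do not exist under `base_dir`."""
    base = Path(base_dir)
    return [
        name
        for mesh in meshes
        for count in partition_counts
        for name in partition_files(mesh, count)
        if not (base / name).exists()
    ]


if __name__ == "__main__":
    # Shared input folder mentioned in this section.
    base_dir = "/scratch/project/dd-24-88/benchmarking/inputdata"
    for name in missing_files(base_dir, ["M1", "M2"], [32, 64, 128]):
        print("missing:", name)
```

An empty output means every mesh level has a partitioning for every task count you intend to run.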

| Running the test cases for the FEM order P3 and the mesh level 3 can take some time, so we will not use them in this session. |

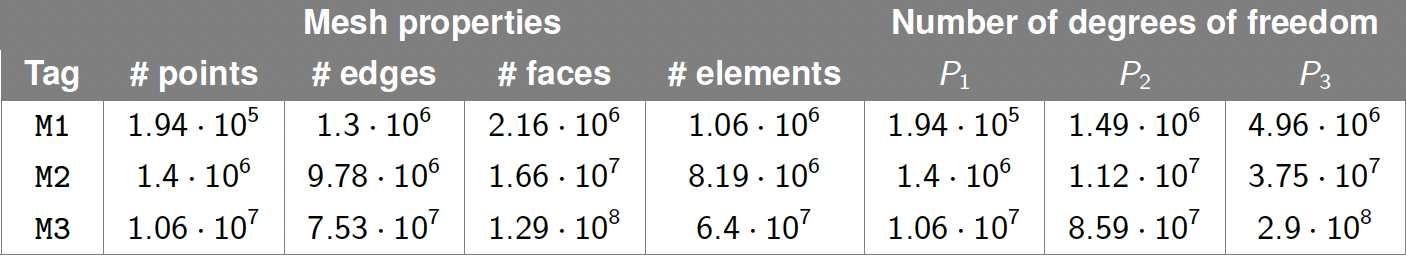

The following table shows the statistics on meshes and number of degrees of freedom with respect to the finite element approximation.

1.5.1. Resources

Solution

{

"resources":{

"tasks":"{{parameters.tasks.value}}"

},

"parameters": [

{

"name":"tasks",

"sequence":[32,64,128]

}

]

}

1.5.2. Parameters

Solution

{

"parameters": [

{

"name":"tasks",

"sequence":[32,64,128]

},

{

"name":"mesh",

"sequence":["M1","M2"]

},

{

"name":"discretization",

"sequence":["P1","P2"]

}

]

}

1.5.3. Memory

After some debugging, we have noticed that tests for the different mesh - discretization parameter combinations require different amounts of memory.

| qcpu nodes have 256GB of available memory. |

Memory Solution 1

We can directly specify the parameter combinations inside a parameter, as a sequence.

{

"resources":{

"tasks":"{{parameters.tasks.value}}",

"exclusive_access":true,

"memory":"{{parameters.geometry.memory.value}}"

},

"parameters": [

{

"name":"tasks",

"sequence":[32,64,128]

},

{

"name":"geometry",

"sequence":[

{"mesh":"M1", "discretization":"P1", "memory":100},

{"mesh":"M2", "discretization":"P1", "memory":200},

{"mesh":"M1", "discretization":"P2", "memory":300},

{"mesh":"M2", "discretization":"P2", "memory":300}

]

}

]

}

Memory Solution 2

We can use parameter conditions instead, so that we do not need to specify all possible combinations.

{

"resources":{

"tasks":"{{parameters.tasks.value}}",

"exclusive_access":true,

"memory":"{{parameters.memory.value}}"

},

"parameters": [

{

"name":"tasks",

"sequence":[32,64,128]

},

{

"name":"mesh",

"sequence":["M1","M2"]

},

{

"name":"discretization",

"sequence":["P1","P2"]

},

{

"name":"memory",

"sequence":[100, 200, 300],

"conditions":{

"100": [ { "discretization": ["P1"], "mesh": ["M1"] } ],

"200": [ { "discretization": ["P1"], "mesh": ["M2"] } ],

"300": [ { "discretization": ["P2"] } ]

}

}

]

}

1.5.4. Setting input paths

Now that the parameters are set, and we understand how the input data is structured, we can proceed to specifying the input files for each test case.

Solution

{

"input_file_dependencies":{

"solver_cfg":"ThermalBridges/gamg.cfg",

"mesh_json":"ThermalBridges/{{parameters.mesh.value}}/case3_p{{parameters.tasks.value}}.json",

"mesh_hdf5":"ThermalBridges/{{parameters.mesh.value}}/case3_p{{parameters.tasks.value}}.h5"

}

}

Solution

{

"variables":{

"solver":"gamg"

},

"input_file_dependencies":{

"solver_cfg":"ThermalBridges/{{variables.solver}}.cfg"

}

}

1.6. Outputs

For this session, we require all application outputs to be written to a user-dependent directory.

Solution

{

"output_directory": "{{machine.output_app_dir}}/ThermalBridges"

}

1.6.1. Application outputs

The toolbox exports the following files to the output directory passed as an argument.

The scalability files have a special format, which we will call "tsv" and which is supported by feelpp.benchmarking. Use this format for scalability files.

- heat.scalibility.HeatConstructor.data
  - Contains the following columns: "initMaterialProperties", "initMesh", "initFunctionSpaces", "initPostProcess", "graph", "matrixVector", "algebraicOthers"
- heat.scalibility.HeatPostProcessing.data
  - Contains only this column: "exportResults"
- heat.scalibility.HeatSolve.data
  - Contains the columns: "algebraic-assembly", "algebraic-solve", "ksp-niter"
- heat.measures/values.csv
  - Contains these columns for heat flows: "Normal_Heat_Flux_alpha", "Normal_Heat_Flux_beta", "Normal_Heat_Flux_gamma"
  - And these columns for temperature: "Points_alpha_max_field_temperature", "Points_alpha_min_field_temperature", "Points_beta_max_field_temperature", "Points_beta_min_field_temperature"
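Other configurations in this exercise refer to a column through the name of its stage, for example Solve_ksp-niter or Outputs_Normal_Heat_Flux_alpha. Assuming that variable names are always built as `<stage name>_<column name>` (an inference from those examples, not a documented rule quoted here), the names available for plotting can be listed with a short Python sketch. The stage names are the ones used in the scalability configuration of this exercise, and `variable_names` is a hypothetical helper.

```python
# Columns listed above, grouped under the stage name that reads each file.
STAGE_COLUMNS = {
    "Constructor": [
        "initMaterialProperties", "initMesh", "initFunctionSpaces",
        "initPostProcess", "graph", "matrixVector", "algebraicOthers",
    ],
    "PostProcessing": ["exportResults"],
    "Solve": ["algebraic-assembly", "algebraic-solve", "ksp-niter"],
    "Outputs": [
        "Normal_Heat_Flux_alpha", "Normal_Heat_Flux_beta", "Normal_Heat_Flux_gamma",
        "Points_alpha_max_field_temperature", "Points_alpha_min_field_temperature",
        "Points_beta_max_field_temperature", "Points_beta_min_field_temperature",
    ],
}


def variable_names(stage_columns):
    """Build '<stage>_<column>' names (assumed naming convention)."""
    return [
        f"{stage}_{column}"
        for stage, columns in stage_columns.items()
        for column in columns
    ]


if __name__ == "__main__":
    for name in variable_names(STAGE_COLUMNS):
        print(name)
```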

Solution

{

"scalability": {

"directory": "{{output_directory}}/{{instance}}/{{use_case_name}}",

"clean_directory":true,

"stages": [

{

"name": "Constructor",

"filepath": "heat.scalibility.HeatConstructor.data",

"format": "tsv"

},

{

"name": "PostProcessing",

"filepath": "heat.scalibility.HeatPostProcessing.data",

"format": "tsv"

},

{

"name": "Solve",

"filepath": "heat.scalibility.HeatSolve.data",

"format": "tsv",

"units":{

"*":"s",

"ksp-niter":"iter"

}

},

{

"name":"Outputs",

"filepath": "heat.measures/values.csv",

"format": "csv",

"units":{

"*":"W"

}

}

]

}

}

Solution

{

"scalability":{

"custom_variables": [

{

"name":"Total",

"columns":["Constructor_init","Solve_solve","PostProcessing_exportResults" ],

"op":"sum",

"unit":"s"

}

]

}

}

1.6.2. Additional files

The Heat toolbox builds AsciiDoc files containing the description of the current test, which we can include in the report. They can be found in {{output_directory}}/{{instance}}/{{use_case_name}}/heat.information.adoc.

We can also include the log files located under {{output_directory}}/{{instance}}/{{use_case_name}}/logs/{{executable}}.INFO and {{output_directory}}/{{instance}}/{{use_case_name}}/logs/{{executable}}.WARNING.
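To see what such templated paths expand to, here is a minimal Python sketch of placeholder resolution. It is not the resolver used by feelpp.benchmarking: resolving the innermost `{{...}}` first and repeating until nothing changes is an assumption about how nested placeholders behave, and the values of `instance` and `machine.output_app_dir` are hypothetical.

```python
import re

# Matches an innermost placeholder: {{...}} with no braces inside.
PLACEHOLDER = re.compile(r"\{\{([^{}]+)\}\}")


def lookup(context, dotted_key):
    """Follow a dotted key such as 'machine.platform' through nested dicts."""
    value = context
    for part in dotted_key.split("."):
        value = value[part]
    return value


def resolve(template, context, max_passes=10):
    """Substitute placeholders repeatedly so nested and chained ones settle."""
    for _ in range(max_passes):
        resolved = PLACEHOLDER.sub(
            lambda match: str(lookup(context, match.group(1).strip())), template
        )
        if resolved == template:
            return resolved
        template = resolved
    raise ValueError("placeholders did not settle (circular reference?)")


CONTEXT = {
    "machine": {"platform": "apptainer", "output_app_dir": "/tmp/out"},  # hypothetical dir
    "platforms": {"apptainer": {"input_dir": "/input_data"}},
    "output_directory": "{{machine.output_app_dir}}/ThermalBridges",
    "use_case_name": "ThermalBridges",
    "executable": "feelpp_toolbox_heat",
    "instance": "example_instance",  # hypothetical value
}

if __name__ == "__main__":
    log = "{{output_directory}}/{{instance}}/{{use_case_name}}/logs/{{executable}}.INFO"
    print(resolve(log, CONTEXT))
    print(resolve("{{platforms.{{machine.platform}}.input_dir}}", CONTEXT))
```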

Solution

{

"additional_files":{

"parameterized_descriptions_filepath":"{{output_directory}}/{{instance}}/{{use_case_name}}/heat.information.adoc",

"custom_logs":[

"{{output_directory}}/{{instance}}/{{use_case_name}}/logs/{{executable}}.INFO",

"{{output_directory}}/{{instance}}/{{use_case_name}}/logs/{{executable}}.WARNING"

]

}

}

1.7. Options

Now that the necessary fields are set, we should set the application options depending on the parameter values.
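The --heat.json.patch option in the options list of this section embeds a JSON Patch operation inside a JSON string, which is why its quotes are escaped. Writing that escaping by hand is error-prone, so one possible approach is to generate the string. The sketch below uses only Python's standard json module; the helper name `mesh_patch_option` is hypothetical, and the output differs from the hand-written option only in whitespace.

```python
import json


def mesh_patch_option(mesh_path):
    """Build the --heat.json.patch option that replaces the imported mesh file."""
    patch = {
        "op": "replace",
        "path": "/Meshes/heat/Import/filename",
        "value": mesh_path,
    }
    # Single quotes keep the JSON intact when the option goes through a shell.
    return "--heat.json.patch='" + json.dumps(patch) + "'"


if __name__ == "__main__":
    option = mesh_patch_option(
        "{{platforms.{{machine.platform}}.input_dir}}/{{input_file_dependencies.mesh_json}}"
    )
    print(option)              # form seen on the command line
    print(json.dumps(option))  # escaped form to paste into the "options" list
```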

{

"options": [

"--config-files /usr/share/feelpp/data/testcases/toolboxes/heat/cases/Building/ThermalBridgesENISO10211/case3.cfg","{{platforms.{{machine.platform}}.input_dir}}/{{input_file_dependencies.solver_cfg}}",

"--directory {{output_directory}}/{{instance}}",

"--repository.case {{use_case_name}}",

"--heat.scalability-save=1",

"--repository.append.np 0",

"--case.discretization {{parameters.discretization.value}}",

"--heat.json.patch='{\"op\": \"replace\",\"path\": \"/Meshes/heat/Import/filename\",\"value\": \"{{platforms.{{machine.platform}}.input_dir}}/{{input_file_dependencies.mesh_json}}\" }'"

]

}

1.8. Summary

Complete benchmark specification

{

"executable": "feelpp_toolbox_heat",

"use_case_name": "ThermalBridges",

"timeout":"0-00:10:00",

"output_directory": "{{machine.output_app_dir}}/ThermalBridges",

"resources":{

"tasks":"{{parameters.tasks.value}}",

"exclusive_access":true,

"memory":"{{parameters.memory.value}}"

},

"input_file_dependencies":{

"solver_cfg":"ThermalBridges/{{fixed.solver}}.cfg",

"mesh_json":"ThermalBridges/{{parameters.mesh.value}}/case3_p{{parameters.tasks.value}}.json",

"mesh_hdf5":"ThermalBridges/{{parameters.mesh.value}}/case3_p{{parameters.tasks.value}}.h5"

},

"platforms": {

"apptainer":{

"image": {

"filepath":"{{machine.containers.apptainer.image_base_dir}}/feelpp-noble.sif"

},

"input_dir":"/input_data",

"options": [

"--home {{machine.output_app_dir}}",

"--bind {{machine.input_dataset_base_dir}}/:{{platforms.apptainer.input_dir}}",

"--env OMP_NUM_THREADS=1"

],

"append_app_option":[]

},

"builtin":{

"input_dir":"{{machine.input_dataset_base_dir}}",

"append_app_option":[]

}

},

"options": [

"--config-files /usr/share/feelpp/data/testcases/toolboxes/heat/cases/Building/ThermalBridgesENISO10211/case3.cfg","{{platforms.{{machine.platform}}.input_dir}}/{{input_file_dependencies.solver_cfg}}",

"--directory {{output_directory}}/{{instance}}",

"--repository.case {{use_case_name}}",

"--heat.scalability-save=1",

"--repository.append.np 0",

"--case.discretization {{parameters.discretization.value}}",

"--heat.json.patch='{\"op\": \"replace\",\"path\": \"/Meshes/heat/Import/filename\",\"value\": \"{{platforms.{{machine.platform}}.input_dir}}/{{input_file_dependencies.mesh_json}}\" }'"

],

"additional_files":{

"parameterized_descriptions_filepath":"{{output_directory}}/{{instance}}/{{use_case_name}}/heat.information.adoc",

"custom_logs":[

"{{output_directory}}/{{instance}}/{{use_case_name}}/logs/{{executable}}.INFO",

"{{output_directory}}/{{instance}}/{{use_case_name}}/logs/{{executable}}.WARNING"

]

},

"scalability": {

"directory": "{{output_directory}}/{{instance}}/{{use_case_name}}",

"clean_directory":true,

"stages": [

{

"name": "Constructor",

"filepath": "heat.scalibility.HeatConstructor.data",

"format": "tsv"

},

{

"name": "PostProcessing",

"filepath": "heat.scalibility.HeatPostProcessing.data",

"format": "tsv"

},

{

"name": "Solve",

"filepath": "heat.scalibility.HeatSolve.data",

"format": "tsv",

"units":{

"*":"s",

"ksp-niter":"iter"

}

},

{

"name":"Outputs",

"filepath": "heat.measures/values.csv",

"format": "csv",

"units":{

"*":"W"

}

}

],

"custom_variables": [

{

"name":"Total",

"columns":["Constructor_init","Solve_solve","PostProcessing_exportResults" ],

"op":"sum",

"unit":"s"

}

]

},

"sanity": { "success": [], "error": [] },

"fixed":{

"solver":"gamg"

},

"parameters": [

{

"name":"tasks",

"sequence":[32,64,128]

},

{

"name":"mesh",

"sequence":["M1","M2"]

},

{

"name":"discretization",

"sequence":["P1","P2"]

},

{

"name":"memory",

"sequence":[100, 200, 300],

"conditions":{

"100": [ { "discretization": ["P1"], "mesh": ["M1"] } ],

"200": [ { "discretization": ["P1"], "mesh": ["M2"] } ],

"300": [ { "discretization": ["P2"] } ]

}

}

]

}

1.8.1. Verification

Before launching the benchmarks, we want to ensure that everything is set up correctly. For this, we can use:

- The --list flag to list all tests that will be executed.
- The --dry-run flag to verify that input dependencies are found, and to take a quick look at the generated SLURM scripts.

By running this command, we should see a total of 12 tests.

feelpp-benchmarking-exec -mc examples/ThermalBridges/karolina.json -bc examples/ThermalBridges/thermal_bridges.json --dry-run -rfm="-l -v"

We can run it without the --list flag (-l) to generate the SLURM scripts.
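The expected count of 12 tests can be cross-checked by enumerating the parameter space by hand. The following Python sketch takes the parameters and conditions from the complete benchmark specification; the interpretation of conditions (a memory value is kept when at least one of its rules matches every listed parameter) is an assumption, not a statement about feelpp.benchmarking's implementation.

```python
from itertools import product

PARAMETERS = {
    "tasks": [32, 64, 128],
    "mesh": ["M1", "M2"],
    "discretization": ["P1", "P2"],
    "memory": [100, 200, 300],
}

# Rules attached to each value of the "memory" parameter.
MEMORY_CONDITIONS = {
    100: [{"discretization": ["P1"], "mesh": ["M1"]}],
    200: [{"discretization": ["P1"], "mesh": ["M2"]}],
    300: [{"discretization": ["P2"]}],
}


def is_allowed(combo):
    """A combination is kept if any rule for its memory value matches fully."""
    rules = MEMORY_CONDITIONS[combo["memory"]]
    return any(
        all(combo[name] in allowed for name, allowed in rule.items())
        for rule in rules
    )


def enumerate_tests():
    """Cartesian product of all parameters, filtered by the memory conditions."""
    names = list(PARAMETERS)
    combos = (dict(zip(names, values)) for values in product(*PARAMETERS.values()))
    return [combo for combo in combos if is_allowed(combo)]


if __name__ == "__main__":
    tests = enumerate_tests()
    for test in tests:
        print(test)
    print(len(tests), "tests")
```

Without the conditions the product would contain 3 x 2 x 2 x 3 = 36 combinations; the conditions pin a single memory value to each mesh and discretization pair, leaving 3 x 2 x 2 = 12.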

1.9. Plots

1.9.1. Measure validation

First, we are interested in seeing how the computed measures (solutions) behave depending on the mesh refinement and the discretization used. We expect that the more refined the mesh and the higher the FEM order, the closer the solutions will be to the expected values.

Solution

{

"plots": [

{

"title": "Validation measures (Heat flux)",

"plot_types": [ "scatter" ],

"transformation": "performance",

"variables": [

"Outputs_Normal_Heat_Flux_alpha", "Outputs_Normal_Heat_Flux_beta", "Outputs_Normal_Heat_Flux_gamma"

],

"names": [

"Normal_Heat_Flux_alpha", "Normal_Heat_Flux_beta", "Normal_Heat_Flux_gamma"

],

"xaxis": {

"parameter": "mesh",

"label": "mesh levels"

},

"yaxis": {

"label": "Heat flow [W]"

},

"color_axis":{

"parameter": "discretization",

"label":"Discretization"

},

"secondary_axis":{

"parameter": "performance_variable",

"label": "Measures"

},

"aggregations":[ {"column":"tasks","agg":"max"} ]

}

]

}

Solution

{

"title": "Validation measures (Temperatures)",

"plot_types": [ "scatter" ],

"transformation": "performance",

"variables": [

"Outputs_Points_alpha_max_field_temperature", "Outputs_Points_alpha_min_field_temperature",

"Outputs_Points_beta_max_field_temperature", "Outputs_Points_beta_min_field_temperature"

],

"names": [

"Points_alpha_max_field_temperature", "Points_alpha_min_field_temperature",

"Points_beta_max_field_temperature", "Points_beta_min_field_temperature"

],

"xaxis": {

"parameter": "mesh",

"label": "mesh levels"

},

"yaxis": {

"label": "Temperature [°C]"

},

"color_axis":{

"parameter": "discretization",

"label":"Discretization"

},

"secondary_axis":{

"parameter": "performance_variable",

"label": "Measures"

},

"aggregations":[ {"column":"tasks","agg":"max"} ]

}

1.9.2. Analyzing execution times

Now, we want to compare the time taken by the different steps of the application, and see how they scale depending on the number of tasks they run on.
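As a reminder of what scaling means numerically, here is a minimal Python sketch of speedup and parallel efficiency relative to the run with the fewest tasks. Whether the "speedup" transformation of feelpp.benchmarking uses exactly this definition is an assumption, and the timings are placeholders chosen to keep the arithmetic simple, not measurements.

```python
def speedup(times_by_tasks):
    """Speedup of each run relative to the run with the fewest tasks."""
    base_tasks = min(times_by_tasks)
    base_time = times_by_tasks[base_tasks]
    return {tasks: base_time / time for tasks, time in sorted(times_by_tasks.items())}


def parallel_efficiency(times_by_tasks):
    """Speedup divided by the increase in task count (1.0 means ideal scaling)."""
    base_tasks = min(times_by_tasks)
    return {
        tasks: value * base_tasks / tasks
        for tasks, value in speedup(times_by_tasks).items()
    }


if __name__ == "__main__":
    # Placeholder execution times in seconds, keyed by number of tasks.
    timings = {32: 8.0, 64: 4.0, 128: 2.5}
    print(speedup(timings))
    print(parallel_efficiency(timings))
```

A stage whose efficiency drops quickly as tasks increase (mesh loading or result export, for instance, are typical candidates) limits the total speedup even when the solve itself scales well.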

Solution

{

"title": "Performance (P1)",

"plot_types": [ "stacked_bar", "grouped_bar" ],

"transformation": "performance",

"variables": [

"Constructor_init",

"Solve_algebraic-assembly",

"Solve_algebraic-solve",

"PostProcessing_exportResults"

],

"names": [

"Preprocessing",

"Algebraic assembly",

"Algebraic solve",

"Postprocess"

],

"xaxis": {

"parameter": "tasks",

"label": "Number of tasks"

},

"secondary_axis": {

"parameter": "mesh",

"label": "Mesh level"

},

"yaxis": {

"label": "execution time (s)"

},

"color_axis":{

"parameter":"performance_variable",

"label":"Performance variable"

},

"aggregations":[

{"column":"performance_variable", "agg":"sum"},

{"column":"discretization","agg":"filter:P1"}

]

}

Solution

{

"title": "Speedup (P2)",

"plot_types": [ "scatter" ],

"transformation": "speedup",

"variables": [

"Constructor_init",

"Solve_algebraic-assembly",

"Solve_algebraic-solve",

"PostProcessing_exportResults",

"Total"

],

"names": [

"Preprocessing",

"Algebraic assembly",

"Algebraic solve",

"Postprocess",

"Total"

],

"xaxis": {

"parameter": "tasks",

"label": "Number of tasks"

},

"secondary_axis": {

"parameter": "mesh",

"label": "Mesh level"

},

"yaxis": {

"label": "Speedup"

},

"color_axis":{

"parameter":"performance_variable",

"label":"Performance variable"

},

"aggregations":[

{"column":"discretization","agg":"filter:P2"}

]

}

1.9.3. Other performance variables

Solution

{

"title":"Number of iterations of GMRES",

"plot_types":["scatter"],

"transformation":"performance",

"variables":["Solve_ksp-niter"],

"names":[],

"xaxis":{

"parameter":"tasks",

"label":"Number of tasks"

},

"yaxis":{

"label":"Number of iterations"

},

"color_axis":{

"parameter":"mesh",

"label":"Mesh"

},

"secondary_axis":{

"parameter":"discretization",

"label":"Discretization"

},

"aggregations":[

{"column":"performance_variable","agg":"filter:Solve_ksp-niter"}

]

}

1.9.4. Multi-parameter figures (optional)

We sometimes want to show all of the benchmarked information in the same figure, which is difficult to do in a simple line plot when there are more than two parameters. For this, feelpp.benchmarking supports some multi-parametric figures, such as surface and 3D scatter plots, parallel coordinates plots and sunburst charts. These take an additional field in the plot configuration: the extra_axes field.

Solution

{

"title": "Parameters",

"plot_types": [ "parallelcoordinates" ],

"transformation": "performance",

"variables": ["Constructor_init", "Solve_solve", "PostProcessing_exportResults" ],

"xaxis": { "parameter": "tasks", "label": "Tasks" },

"secondary_axis": { "parameter": "mesh", "label": "Mesh" },

"yaxis": { "label": "execution time (s)"},

"color_axis": {"parameter":"performance_variable", "label":"Step"},

"extra_axes": [ { "parameter": "discretization", "label": "Discretization" }]

}

Solution

{

"title": "Application Performance",

"plot_types": [ "sunburst","scatter3d" ],

"transformation": "performance",

"variables": ["Constructor_init", "Solve_solve", "PostProcessing_exportResults" ],

"xaxis": { "parameter": "tasks", "label": "Tasks" },

"secondary_axis": { "parameter": "mesh", "label": "Mesh" },

"yaxis": { "label": "execution time (s)"},

"color_axis": {"parameter":"performance_variable", "label":"Step"},

"extra_axes": [ { "parameter": "discretization", "label": "Discretization" }]

}

Complete plot configuration

{

"plots": [

{

"title": "Validation measures (Heat flux)",

"plot_types": [ "scatter" ],

"transformation": "performance",

"variables": [ "Outputs_Normal_Heat_Flux_alpha", "Outputs_Normal_Heat_Flux_beta", "Outputs_Normal_Heat_Flux_gamma" ],

"names": [ "Normal_Heat_Flux_alpha", "Normal_Heat_Flux_beta", "Normal_Heat_Flux_gamma" ],

"xaxis": { "parameter": "mesh", "label": "mesh levels" },

"yaxis": { "label": "Heat flow [W]" },

"color_axis":{ "parameter": "discretization", "label":"Discretization" },

"secondary_axis":{ "parameter": "performance_variable", "label": "Measures" },

"aggregations":[ {"column":"tasks","agg":"max"} ]

},

{

"title": "Validation measures (Temperatures)",

"plot_types": [ "scatter" ],

"transformation": "performance",

"variables": [ "Outputs_Points_alpha_max_field_temperature", "Outputs_Points_alpha_min_field_temperature", "Outputs_Points_beta_max_field_temperature", "Outputs_Points_beta_min_field_temperature" ],

"names": [ "Points_alpha_max_field_temperature", "Points_alpha_min_field_temperature", "Points_beta_max_field_temperature", "Points_beta_min_field_temperature" ],

"xaxis": { "parameter": "mesh", "label": "mesh levels" },

"yaxis": { "label": "Temperature [°C]" },

"color_axis":{ "parameter": "discretization", "label":"Discretization" },

"secondary_axis":{ "parameter": "performance_variable", "label": "Measures" },

"aggregations":[ {"column":"tasks","agg":"max"} ]

},

{

"title": "Performance (P1)",

"plot_types": [ "stacked_bar", "grouped_bar" ],

"transformation": "performance",

"variables": [ "Constructor_init", "Solve_algebraic-assembly", "Solve_algebraic-solve", "PostProcessing_exportResults" ],

"names": [ "Preprocessing", "Algebraic assembly", "Algebraic solve", "Postprocess" ],

"xaxis": { "parameter": "tasks", "label": "Number of tasks" },

"secondary_axis": { "parameter": "mesh", "label": "Mesh level" },

"yaxis": { "label": "execution time (s)" },

"color_axis":{ "parameter":"performance_variable", "label":"Performance variable" },

"aggregations":[

{"column":"performance_variable", "agg":"sum"},

{"column":"discretization","agg":"filter:P1"}

]

},

{

"title": "Speedup (P2)",

"plot_types": [ "scatter" ],

"transformation": "speedup",

"variables": [ "Constructor_init", "Solve_algebraic-assembly", "Solve_algebraic-solve", "PostProcessing_exportResults", "Total" ],

"names": [ "Preprocessing", "Algebraic assembly", "Algebraic solve", "Postprocess", "Total" ],

"xaxis": { "parameter": "tasks", "label": "Number of tasks" },

"secondary_axis": { "parameter": "mesh", "label": "Mesh level" },

"yaxis": { "label": "Speedup" },

"color_axis":{ "parameter":"performance_variable", "label":"Performance variable" },

"aggregations":[

{"column":"discretization","agg":"filter:P2"}

]

},

{

"title":"Number of iterations of GMRES",

"plot_types":["scatter"],

"transformation":"performance",

"variables":["Solve_ksp-niter"],

"names":[],

"xaxis":{ "parameter":"tasks", "label":"Number of tasks" },

"yaxis":{ "label":"Number of iterations" },

"color_axis":{ "parameter":"mesh", "label":"Mesh" },

"secondary_axis":{ "parameter":"discretization", "label":"Discretization" },

"aggregations":[

{"column":"performance_variable","agg":"filter:Solve_ksp-niter"}

]

},

{

"title": "Parameters",

"plot_types": [ "parallelcoordinates" ],

"transformation": "performance",

"variables": ["Constructor_init", "Solve_solve", "PostProcessing_exportResults" ],

"xaxis": { "parameter": "tasks", "label": "Tasks" },

"secondary_axis": { "parameter": "mesh", "label": "Mesh" },

"yaxis": { "label": "execution time (s)"},

"color_axis": {"parameter":"performance_variable", "label":"Step"},

"extra_axes": [ { "parameter": "discretization", "label": "Discretization" }]

},

{

"title": "Application Performance",

"plot_types": [ "sunburst","scatter3d" ],

"transformation": "performance",

"variables": ["Constructor_init", "Solve_solve", "PostProcessing_exportResults" ],

"xaxis": { "parameter": "tasks", "label": "Tasks" },

"secondary_axis": { "parameter": "mesh", "label": "Mesh" },

"yaxis": { "label": "execution time (s)"},

"color_axis": {"parameter":"performance_variable", "label":"Step"},

"extra_axes": [ { "parameter": "discretization", "label": "Discretization" }]

}

]

}