Website persistence and aggregating results

The framework allows users to create a dashboard that aggregates results from multiple benchmarks. This is useful for comparing different machines, applications, or use cases.

1. The dashboard configuration file

Whenever a benchmark finishes, a file called website_config.json is created inside the directory specified under reports_base_dir in the machine specification file.

If this file already exists from a previous benchmark, it is updated to include the latest results, for example when a benchmark runs on a new system.

This file describes the dashboard hierarchy and where to find the benchmark results for each case/machine/application.

Each website configuration file corresponds exactly to one dashboard, so users can have multiple dashboards by defining multiple website configuration files.
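The update behaviour described above can be pictured as a nested merge into the existing file content. The following is only a minimal sketch: the function name `register_results` and the `new_machine` entry are hypothetical, the `execution_mapping` layout is taken from the structure shown in this section, and the framework's actual update logic is not shown here.

```python
import copy


def register_results(config, application, machine, use_case, platform, path):
    """Return a copy of config with one more application/machine/use case entry.

    Hypothetical helper: it only illustrates how existing entries can be kept
    while a new one is added.
    """
    updated = copy.deepcopy(config)
    mapping = updated.setdefault("execution_mapping", {})
    # setdefault keeps existing applications and machines and creates missing ones.
    mapping.setdefault(application, {}).setdefault(machine, {})[use_case] = {
        "platform": platform,
        "path": path,
    }
    return updated


# Content of a previous website_config.json (only execution_mapping shown).
existing = {
    "execution_mapping": {
        "my_application": {
            "my_machine": {
                "my_usecase": {"platform": "local", "path": "./path/to/use_case/results"}
            }
        }
    }
}

# The same benchmark is then run on a second, hypothetical machine.
updated = register_results(
    existing, "my_application", "new_machine", "my_usecase",
    "local", "./path/to/new_machine/results",
)
print(sorted(updated["execution_mapping"]["my_application"]))
```

The earlier `my_machine` entry is preserved, so the dashboard would list both machines under the same application.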

The file is structured as follows:

    {
        "execution_mapping":{
            "my_application":{
                "my_machine":{
                    "my_usecase": {
                        "platform": "local", //Or "girder"
                        "path": "./path/to/use_case/results" //Directory containing reports for a single machine-application-usecase combination
                    }
                }
            }
        },
        "machines":{
            "my_machine":{
                "display_name": "My Machine",
                "description": "A description of the machine"
            }
        },
        "applications":{
            "my_application":{
                "display_name": "My Application",
                "description": "A description of the application",
                "main_variables":[] //Used for aggregations
            }
        },
        "use_cases":{
            "my_usecase":{
                "display_name": "My Use Case",
                "description": "A description of the use case"
            }
        }
    }

The execution_mapping field describes the hierarchy of the dashboard, that is, which applications were run on which machines and for which use cases. The path field can point to reports stored in remote locations, such as Girder.

The machines, applications, and use_cases fields define the display names and descriptions for each machine, application, and use case, respectively.
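To make the relationship between execution_mapping and the three display sections concrete, here is a minimal sketch that flattens the hierarchy into one entry per application/machine/use case. The function name `list_report_locations` is hypothetical and this is not how the framework itself reads the file. The embedded text is strict JSON, so the explanatory comments from the listing above are left out.

```python
import json

# Hypothetical file content following the structure described above.
CONFIG_TEXT = """
{
  "execution_mapping": {
    "my_application": {
      "my_machine": {
        "my_usecase": {"platform": "local", "path": "./path/to/use_case/results"}
      }
    }
  },
  "machines": {
    "my_machine": {"display_name": "My Machine", "description": "A description of the machine"}
  },
  "applications": {
    "my_application": {
      "display_name": "My Application",
      "description": "A description of the application",
      "main_variables": []
    }
  },
  "use_cases": {
    "my_usecase": {"display_name": "My Use Case", "description": "A description of the use case"}
  }
}
"""


def list_report_locations(config):
    """Flatten execution_mapping into one entry per application/machine/use case."""
    entries = []
    for app_id, machines in config["execution_mapping"].items():
        for machine_id, use_cases in machines.items():
            for usecase_id, location in use_cases.items():
                entries.append({
                    # Identifiers in execution_mapping are keys of the display sections.
                    "application": config["applications"][app_id]["display_name"],
                    "machine": config["machines"][machine_id]["display_name"],
                    "use_case": config["use_cases"][usecase_id]["display_name"],
                    "platform": location["platform"],
                    "path": location["path"],
                })
    return entries


config = json.loads(CONFIG_TEXT)
locations = list_report_locations(config)
for entry in locations:
    print(entry)
```

Each identifier used in execution_mapping is expected to appear as a key in machines, applications, or use_cases. In this sketch a missing key raises a KeyError, which makes inconsistencies easy to spot.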

2. Aggregating results

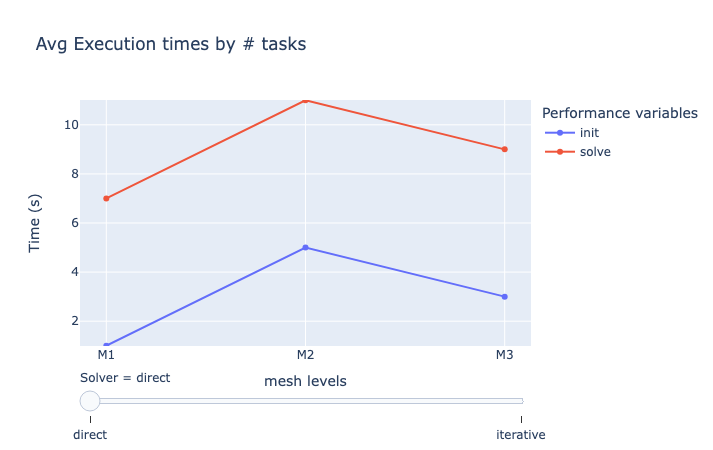

If a benchmark has more than two parameters, it can be difficult to visualize its results in a single figure. The framework allows users to aggregate results by reducing the number of dimensions in the data. In addition, the framework indexes reports by their date, the system they were executed on, the platform and environment, and the application and use case benchmarked.

2.1. The aggregations field on Figure Specifications

In order to aggregate data (and reduce the number of dimensions), the aggregations field can be used on the figure specification file. This field is a list of dictionaries, each containing the fields column and agg, describing the column/parameter to aggregate and the operation to perform, respectively.

Available aggregation operations are:

- mean: The mean of the values
- sum: The sum of the values
- min: The minimum value
- max: The maximum value
- filter:value: Filters the data based on the value of the column
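The effect of the aggregations field can be illustrated with a small standard-library sketch. It assumes each entry collapses its column by grouping on all remaining dimensions, and that filter:value keeps only the rows where the column equals the given value. The function `apply_aggregations`, the `value` key, and the column names `tasks` and `mesh` are hypothetical, so this is not the framework's implementation.

```python
from collections import defaultdict
from statistics import mean

# Reducers for the operations listed above; "filter:value" is handled separately.
REDUCERS = {"mean": mean, "sum": sum, "min": min, "max": max}


def apply_aggregations(rows, aggregations, value_key="value"):
    """Apply each {"column", "agg"} entry in order, removing one dimension per step."""
    for spec in aggregations:
        column, agg = spec["column"], spec["agg"]
        if agg.startswith("filter:"):
            wanted = agg.split(":", 1)[1]
            # Keep only matching rows, then drop the now-constant column.
            rows = [
                {k: v for k, v in row.items() if k != column}
                for row in rows
                if str(row[column]) == wanted
            ]
            continue
        groups = defaultdict(list)
        for row in rows:
            # Group by every remaining dimension except the aggregated column.
            key = tuple(sorted(
                (k, v) for k, v in row.items() if k not in (column, value_key)
            ))
            groups[key].append(row[value_key])
        rows = [
            {**dict(key), value_key: REDUCERS[agg](values)}
            for key, values in groups.items()
        ]
    return rows


# Hypothetical results with two parameters (tasks, mesh) and one measured variable.
rows = [
    {"tasks": 1, "mesh": "M1", "variable": "solve", "value": 10.0},
    {"tasks": 2, "mesh": "M1", "variable": "solve", "value": 6.0},
    {"tasks": 1, "mesh": "M2", "variable": "solve", "value": 30.0},
    {"tasks": 2, "mesh": "M2", "variable": "solve", "value": 20.0},
]

# Average over the mesh dimension: one value per number of tasks remains.
averaged = apply_aggregations(rows, [{"column": "mesh", "agg": "mean"}])

# Keep only mesh M1, then take the minimum over tasks: a single value remains.
filtered = apply_aggregations(
    rows,
    [{"column": "mesh", "agg": "filter:M1"}, {"column": "tasks", "agg": "min"}],
)
print(averaged)
print(filtered)
```

Each entry removes one column from the data, so a figure with too many parameters can be reduced step by step until it fits the available axes.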

2.2. Overview configuration file

It is possible to create overview pages that show the performance of a group of benchmarks. This is useful for comparing different machines, applications, or use cases.

Each performance value, across all reports, is indexed by:

- The parameter space
- The variable name
- The benchmark date
- The system where the benchmark was executed
- The platform where the benchmark was executed
- The environment where the benchmark was executed
- The application
- The use case

Benchmarks are filtered by default according to the dashboard page you are on. For example, on a machine page, only benchmarks for that machine are shown.

Accessible column names:

- environment
- platform
- result
- machine
- usecase
- date
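The default filtering can be pictured as selecting indexed records by one of the column names listed above. This is a minimal sketch under assumptions: the records, their values, and the function `default_view` are hypothetical, and the dashboard's real filtering code is not shown in this course.

```python
from datetime import date

# Hypothetical indexed records using only the accessible column names.
records = [
    {"machine": "my_machine", "usecase": "my_usecase", "platform": "local",
     "environment": "default", "date": date(2024, 1, 10), "result": 12.5},
    {"machine": "my_machine", "usecase": "other_usecase", "platform": "local",
     "environment": "default", "date": date(2024, 1, 11), "result": 8.0},
    {"machine": "other_machine", "usecase": "my_usecase", "platform": "local",
     "environment": "default", "date": date(2024, 1, 12), "result": 9.5},
]


def default_view(records, page_column=None, page_value=None):
    """Return the records a page would show by default.

    page_column is one of the accessible column names (for example "machine").
    None means that no default filter is applied.
    """
    if page_column is None:
        return list(records)
    return [r for r in records if r[page_column] == page_value]


# On the page of "my_machine", only that machine's benchmarks remain.
machine_page = default_view(records, "machine", "my_machine")
print([r["usecase"] for r in machine_page])
```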

The dashboard administrator can define the overview configuration file, which is a JSON file that describes the figures to be displayed on each overview page.

The overview configuration file is currently extensive and verbose and needs to be simplified, so it is not covered in this course. However, be aware that it is possible to create overview pages that show the performance of a group of benchmarks.
